2022-September-14


Using Tech for Good

BY ANNIE YING · DATA SCIENCE MANAGER · CANADA

WITH HELEN GALL AND ELLEN THORNTON


Editor’s Note: The following story involves a discussion about mental health, including suicide, which might be upsetting to some readers. For mental health resources and support, visit the #SafeToTalk online community or contact the Employee Assistance Program.

I never gave much thought to how we talk about suicide — or how artificial intelligence (AI) could help save the lives of those at risk — until my former colleague Jennifer Redmon told me about the death of her friend Erika.

As we spoke, Jennifer shared how language used in our everyday lives, such as the term “committed suicide,” can harm those who are at risk or have been affected by suicide.

That day, I joined Jennifer’s fight against suicide, matching our conviction with our technical talents. And while Jennifer has since moved on from Cisco, we remain in touch and committed to helping those at risk.

Today, more than 100 Cisco volunteers join me in this effort. As we recognize September as Suicide Prevention Month, we encourage you to remember that language can contribute to suicide contagion.

Read on for more about our work and what you can do to help.

First things first: Words matter

It wasn’t long ago that people used the term “committed suicide” without a second thought. That practice has begun to change as people come to see suicide through the lens of illness rather than moral failing. The word “commit” echoes phrases like “committing a crime” or “committing a sin.”

Mental health experts and suicide prevention specialists now recommend using the phrase, “died by suicide.” Just as someone can die of heart disease, someone can “die by suicide.” Using this phrase allows us to communicate more compassionately — and accurately — about those who have passed.

Reporting suicide responsibly

Broadly speaking, healthcare organizations and private sector corporations are adopting this shift in language. But more work remains to be done, especially in the media.

In fact, journalists, however well-intentioned, risk promoting copycat suicides through their reporting. The problem is so widespread that over a decade ago, the World Health Organization (WHO) made reporting on suicide a priority focus.

The WHO’s work includes creating and maintaining explicit guidelines for how journalists should cover deaths by suicide. These include:

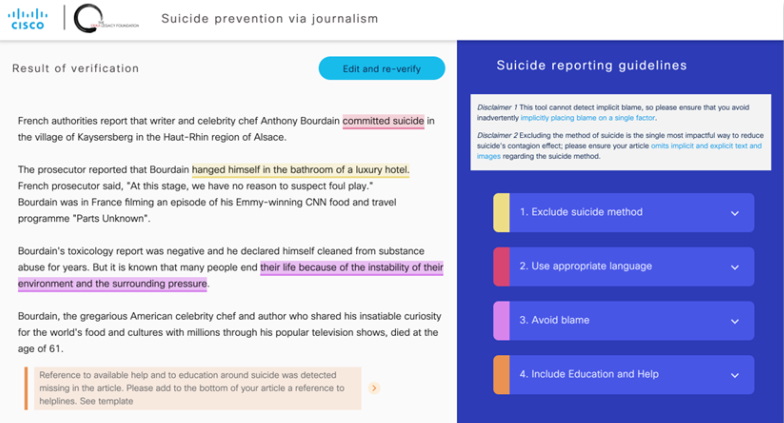

- Using appropriate language, as noted above

- Omitting suicide methods to avoid copycat suicides

- Avoiding blame

- Including educational information and helplines for at-risk readers who may be triggered

Saving lives with artificial intelligence

In November 2019, at our Cisco Vancouver office, Jennifer and I formed a partnership to develop a technical solution to increase adoption of the WHO guidelines. This began with a small workshop where we learned from psychology researchers how harmful words can be to at-risk individuals.

This effort led to the development of a tool that uses AI to improve reporting about suicide. A journalist or content creator working on an article that mentions suicide can run the tool, which works like a spelling or grammar checker, to check the draft against the WHO guidelines.
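This story doesn't describe the checker's internals beyond its use of BERT, so the following is only a rough illustration of the spell-checker-style workflow for a single guideline (appropriate language). It deliberately swaps the trained model for simple phrase matching. The phrase table and the `flag_language` function are hypothetical; only the “committed suicide” to “died by suicide” pair comes from this story.

```python
import re

# Hypothetical phrase table: stigmatizing phrasing -> suggested wording.
# Only the first pair comes from the article; the others are illustrative assumptions.
SUGGESTIONS = {
    r"\bcommitted suicide\b": "died by suicide",
    r"\bcommit suicide\b": "die by suicide",
    r"\bcommits suicide\b": "dies by suicide",
}


def flag_language(text: str) -> list[tuple[int, str, str]]:
    """Return (character offset, matched phrase, suggestion) for each flagged phrase.

    Like a grammar checker, it only points out problems and suggests a fix;
    it does not rewrite the draft. This is keyword matching, not a BERT model.
    """
    findings = []
    for pattern, suggestion in SUGGESTIONS.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.start(), match.group(0), suggestion))
    # Report findings in the order they appear in the draft.
    return sorted(findings)


draft = "The coroner said the man committed suicide last week."
for offset, phrase, suggestion in flag_language(draft):
    print(f"{offset}: replace '{phrase}' with '{suggestion}'")
```

A fixed phrase list like this is easy to audit but misses paraphrases and context, and it cannot judge guidelines such as avoiding blame. That gap is presumably why the real tool relies on a model trained on volunteer-labeled articles.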

I am proud to share that our tool is now referenced in the World Health Organization’s LIVE LIFE: An implementation guide for suicide prevention in countries. This effort also won the Cisco 2020 Data Science Award for Benefitting Humanity, which was presented by AI pioneer Dr. Andrew Ng.

On the technical side, I’m proud that the tool is powered by BERT (Bidirectional Encoder Representations from Transformers), one of the latest deep learning techniques, and that our work was published as a peer-reviewed research paper at the International AI for Social Good Workshop in January 2021.

Volunteers at Cisco step up

This initiative would not have been possible without more than 100 Cisconians who volunteered to support us. They spent hundreds of hours labeling data to train the machine learning model on which the tool was later built.

In addition, Dr. Dan Reidenberg, one of the creators of the WHO’s guidelines, has been involved with the education and design of the tool. And our partnership with Save.org, the first organization in the U.S. dedicated to the prevention of suicide, also helped all of this come together.

We have a lot of work ahead

The term “committed suicide” is still the norm for many people. When we ran our checker on the 1,700 suicide-related media articles that Cisco volunteers labeled, only 3 percent satisfied all four guidelines.

The journey towards a better world where suicide and mental illnesses are not stigmatized is ongoing. But I am hopeful.

Check out our tool and follow these four rules when you talk or write about suicide:

- Exclude suicide method: Don’t share a person's suicide method; doing so leads to an increase in copycat suicides, disproportionately by the same method.

- Use appropriate language: Talk about suicide as you would a terminal illness, e.g., “died by suicide” rather than “committed suicide.”

- Avoid blame or speculation: We often oversimplify suicide by confusing a trigger, such as a life event or another person, with a root cause, such as mental illness.

- Include education and help information: Always offer a crisis line; crisis lines vary by region and location.

Employee Resources

- Wellbeing resources are available on the Global Wellbeing SharePoint site.

- Join our #SafeToTalk online community for articles, videos, tips, and support.

- Contact the Employee Assistance Program (EAP) to work through transitions, work-related stress, and other concerns.

- Check out the Mental Well-Being Degreed Pathway if you’re anxious, depressed, grieving a loss, or want to stay connected.

- Donate to organizations in Bright Funds that advocate for mental health.

Related Links

- Reporting on suicide

- Suicide Awareness Voices of Education (SAVE)

- Our Impact - CSR & Time2Give

- Cisco Purpose Report 2021
